Bouldering and Computer Vision

Working Log

December 31, 2025

I created Crux Beta Labs and will be writing more notes there.

December 18, 2025

A friend of mine shared this (OpenCap: Musculoskeletal forces from smartphone video) with me today, and I was excited because a few months ago I was trying to do the same thing: simulate how climbers use their muscles during movements through musculoskeletal modeling in OpenSim.

Thinking about it in the context of climbing: when you fail to pull off a tricky dyno or coordo move, you have probably said, "Why can't I do that?" or "I'm just not strong enough." But what if climbers had a way to know which aspect of their strength they are lacking, so they would know what to train? I think OpenCap is a great proof of concept that bringing computer vision tools into climbing training has a bright future, although the project's current user experience is terrible in terms of guidance, setup, and so on. Still, it's really nice to see this project put the topic into practice.

November 21, 2025

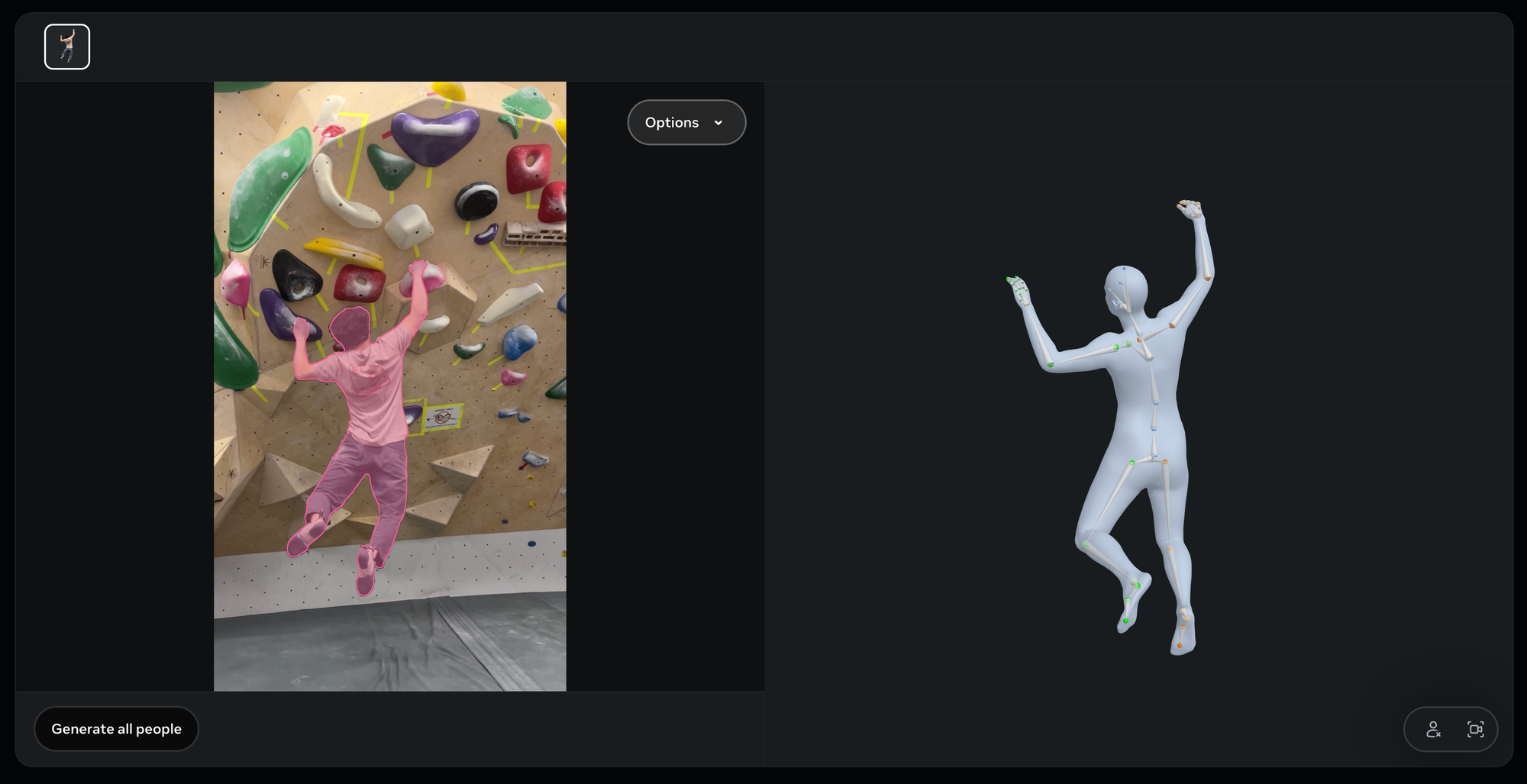

How quickly it estimates a 3D model of any person in a picture is just impressive:

Summer 2025

Climbing Analysis Toolbox is on GitHub! This will be a set of computer vision tools for processing and analyzing climbing videos that I'll update from time to time.

May 15, 2025

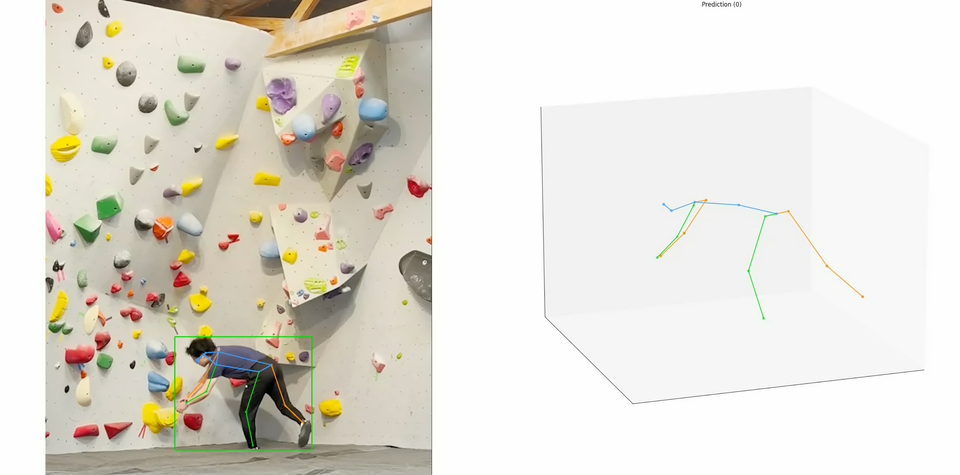

I've been doing a set of new analyses using a pose estimator recently.

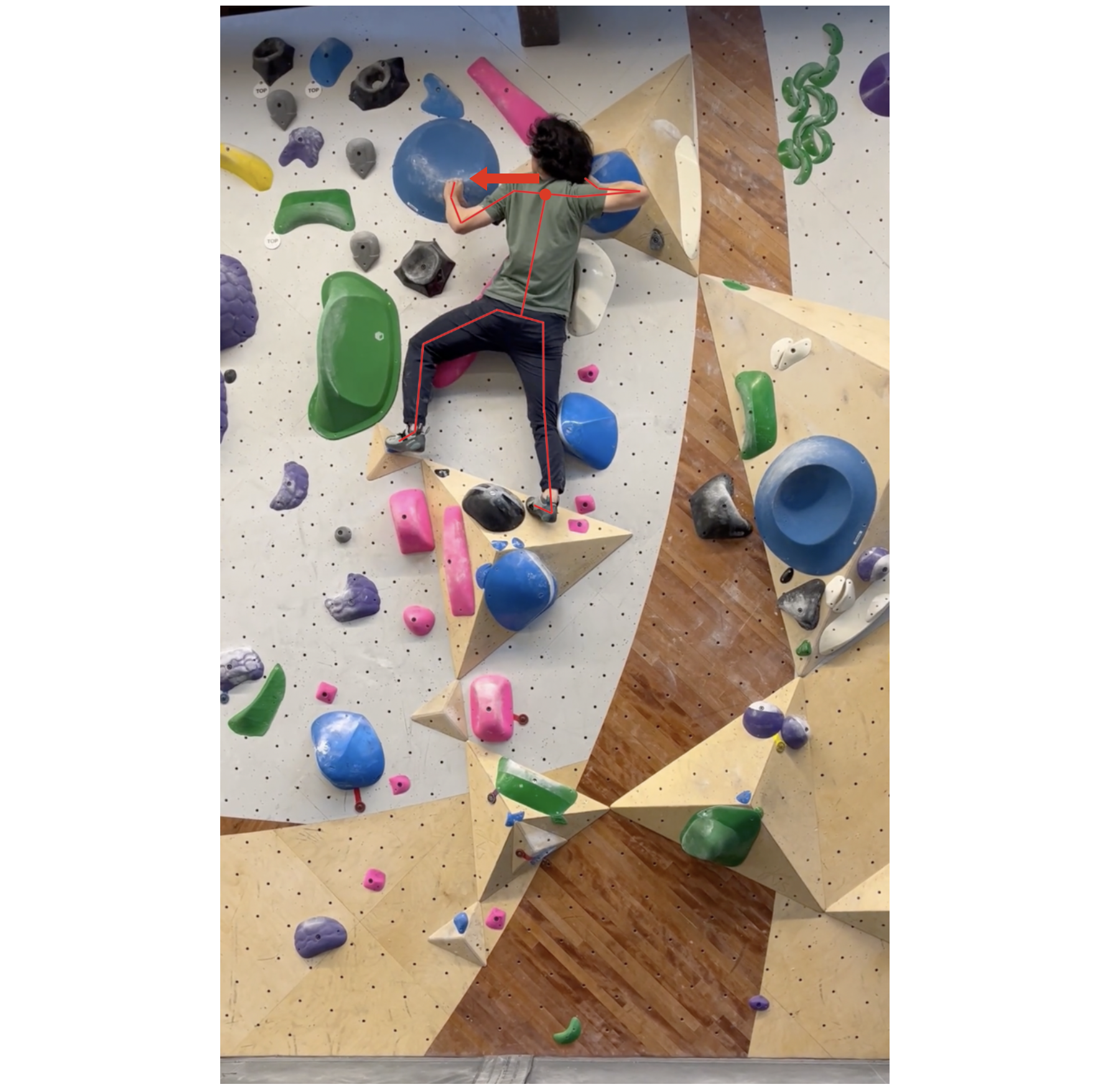

A couple of days ago, I was chatting with Kevin, one of the climbers at Urbana Boulders, about his new move on the barrel wall (curved and overhanging). The move is quite interesting and is not the typical swing you might find on a climb with a lache or campus sequence. Here is the move:

- The climber uses two jugs that are relatively far apart and starts swinging to the left.

- This creates a pendulum swing that helps the climber build momentum in the correct direction, back to the right.

- Once the climber is near the highest possible position on the left (before swinging back), they need to swap the left hand onto the hold near the right-hand hold; this helps the climber generate further power toward the top right.

- It's a coordination sequence, using both the momentum generated from the hips and the power from both hands.

- Finally, the climber catches the last jug, which is the top hold.

It's more intuitive to understand when you watch the move yourself in the video:

It's probably a straightforward move, but it demands coordination and power that beginner or intermediate climbers might need some time to adapt to. In the video, I overlaid the estimated body skeleton on each climber. I specifically marked the hip point and added a 3D velocity vector to visualize how each climber moves toward the top-right (final) hold.
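The hip marker and velocity vector can be computed roughly as sketched below. This sketch makes three assumptions:

- The pose estimator returns per-frame 3D keypoints as a `(frames, joints, 3)` array.
- The left and right hip indices are known. The indices below are hypothetical and depend on the skeleton format.
- The hip point is taken as the midpoint of the two hips, and velocity is a finite difference scaled by the frame rate.

`direction_to_target` is an illustrative extra, not part of the original script. It measures how well each frame's motion points toward the final hold, using the cosine of the angle between the two directions.

```python
import numpy as np

# Hypothetical keypoint indices; adjust to the skeleton layout of your pose estimator.
LEFT_HIP, RIGHT_HIP = 11, 12

def hip_trajectory(keypoints: np.ndarray) -> np.ndarray:
    """Midpoint of the two hip joints for every frame; keypoints has shape (T, J, 3)."""
    return (keypoints[:, LEFT_HIP] + keypoints[:, RIGHT_HIP]) / 2.0

def hip_velocity(keypoints: np.ndarray, fps: float) -> np.ndarray:
    """Per-frame 3D hip velocity (position units per second)."""
    hips = hip_trajectory(keypoints)
    # np.gradient uses central differences inside the sequence and one-sided ones at the ends.
    return np.gradient(hips, 1.0 / fps, axis=0)

def direction_to_target(velocity: np.ndarray, hips: np.ndarray, target: np.ndarray) -> np.ndarray:
    """Cosine between each frame's velocity and the direction from the hips to a target hold."""
    to_target = target - hips
    num = np.sum(velocity * to_target, axis=1)
    den = np.linalg.norm(velocity, axis=1) * np.linalg.norm(to_target, axis=1)
    # Frames with zero speed (or hips exactly on the target) get a cosine of 0.
    return np.divide(num, den, out=np.zeros_like(num), where=den > 0)
```

To draw the arrow, project `hips[t]` and `hips[t] + k * v[t]` back into the image, for some display scale `k`. Use whatever camera model the pose estimator provides.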

From this, I've written a Python script and put it on GitHub as the first tool in a repo called "Climbing Video Analysis," where I plan to post more helpful and meaningful methods that might help climbers understand why their movements don't work, and perhaps offer insights on how to improve through trial and error.

Below is another video analysis, this time of a climb where I was having trouble with the dyno start. I've combined all six videos (two successful and four failed attempts, all pre-processed so that the hold positions are aligned), so you can see the different trajectories from all six tries.
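One way to do the alignment step is to fit a 2D affine transform per video. This assumes the same few holds have been located in every video, either by hand or by a detector. The transform maps each video's hold positions onto a reference video's hold positions, and the result is then applied to that video's trajectory.

This least-squares fit is a generic technique, not necessarily what the author's script does. If the camera viewpoint also changes between videos, a full homography would be the natural upgrade; OpenCV's `cv2.findHomography` computes one.

```python
import numpy as np

def fit_affine(src: np.ndarray, dst: np.ndarray) -> np.ndarray:
    """Least-squares 2x3 affine matrix A such that dst ~= A @ [x, y, 1] for each point.

    src, dst: (N, 2) arrays of matching hold positions, N >= 3 and not all collinear.
    """
    ones = np.ones((len(src), 1))
    design = np.hstack([src, ones])                        # (N, 3)
    params, *_ = np.linalg.lstsq(design, dst, rcond=None)  # (3, 2)
    return params.T                                        # (2, 3)

def apply_affine(affine: np.ndarray, points: np.ndarray) -> np.ndarray:
    """Map (N, 2) points, e.g. a hip trajectory in pixels, into the reference frame."""
    return points @ affine[:, :2].T + affine[:, 2]
```

Once every attempt's trajectory has been mapped into the reference frame, all paths can be drawn over a single frame for comparison.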

April 22, 2025

At this year's first World Cup in Keqiao, I was amazed by how Do-Hyun Lee and Sorata were able to flash some climbs that other competitors seemed to struggle with during the semi-final and final. While watching moves that require a lot of tension and have interesting (but brutal) beta, I wondered whether it would be possible to visualize the pressure or muscle tension athletes exert during a move using a scaled-range color map. For example, one of Lee's moves involves gastoning two extremely sloping surfaces. Would it be possible to visualize how the tension is distributed across his body (wrists, fingers, shoulders, arms, back) to give the audience a more intuitive sense of how hard that move is?
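The color-map half of this idea is simple, as the sketch below shows. It assumes some per-body-part load estimate already exists. Producing that estimate is the hard part, for example with a musculoskeletal model, and the values below are made up.

Each value is normalized against a fixed range and mapped onto a blue-to-red ramp. A fixed range, rather than per-frame min/max, keeps colors comparable across moves and athletes.

```python
import numpy as np

def tension_to_rgb(values, vmin: float, vmax: float) -> np.ndarray:
    """Map scalar load values to RGB colors on a linear blue (low) to red (high) ramp.

    Values outside [vmin, vmax] are clipped so the scale stays fixed across frames.
    """
    if vmax <= vmin:
        raise ValueError("vmax must be greater than vmin")
    v = np.asarray(values, dtype=float)
    t = np.clip((v - vmin) / (vmax - vmin), 0.0, 1.0)
    blue = np.array([0.0, 0.0, 255.0])
    red = np.array([255.0, 0.0, 0.0])
    colors = (1.0 - t)[..., None] * blue + t[..., None] * red
    return np.rint(colors).astype(np.uint8)

# Hypothetical per-part load estimates (arbitrary units) for a single frame.
parts = {"left_fingers": 0.9, "right_fingers": 0.6, "left_shoulder": 0.4, "core": 0.2}
colors = tension_to_rgb(list(parts.values()), vmin=0.0, vmax=1.0)
```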

January 22, 2025

While I was climbing a few Mosaic V8-9 problems recently, I started to notice several things. (1) I always managed to keep at least three limbs on the holds or the volume/wall to maintain an efficient posture for continuing the climb. (2) Different joint angles might affect the difficulty of the next moves. From this, I think many climbing problems could be treated as optimization problems. Each climber has different body metrics (weight, height, arm span, leg span, flexibility, muscle strength and endurance, etc.), whereas climbs (or bouldering problems) are fixed. It should be possible to model the relationship between body metrics and climbs mathematically to find the most efficient beta. I'm still experimenting with this idea. First, though, I probably need to make the model's estimated poses smoother and possibly more accurate, so that I can build a good dataset for the modeling phase. One potential idea is to smooth out the difference between the prior and current poses across consecutive video frames.
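One simple way to smooth out the difference between the prior and current poses is an exponential moving average over frames. The sketch below assumes keypoints come as a `(frames, joints, 3)` array, with missing detections marked as NaN.

The smoothing factor `alpha` is a tuning knob. Smaller values suppress more jitter but lag behind fast moves such as dynos. For that reason, speed-adaptive filters such as the One Euro filter are often preferred for pose tracking.

```python
import numpy as np

def ema_smooth(keypoints, alpha: float = 0.5) -> np.ndarray:
    """Exponential moving average over time.

    smoothed[t] = alpha * raw[t] + (1 - alpha) * smoothed[t - 1]
    A missing joint (NaN) keeps its previous smoothed value, and the first valid
    detection after a gap is taken as-is.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed = np.array(keypoints, dtype=float, copy=True)
    for t in range(1, len(smoothed)):
        prev = smoothed[t - 1]
        raw = smoothed[t]
        blended = np.where(np.isnan(prev), raw, alpha * raw + (1.0 - alpha) * prev)
        # Where the raw detection is missing, fall back to the previous estimate.
        smoothed[t] = np.where(np.isnan(raw), prev, blended)
    return smoothed
```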

On another note, I’ve recently started analyzing hard climbs more thoughtfully. It occurred to me that the "guess the grade" trend on social media is somewhat ambiguous (I know it’s a meme, but I do want to take it seriously). A 2D view of a send video alone isn’t enough to identify the crux of a climb (not to mention that different climbers probably have different cruxes). So, I’ve started making more detailed annotations on climbs.

It's not perfect at the moment, but hopefully I’ll be able to present the details in a more intuitive and analytical way in the future. For example, I could insert a zoom-in shot (either a video or a view from the reconstructed 3D scene) for each crux. I could also compare failed attempts with the successful one and highlight the key adjustments that led to the send.
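Here is a rough way to compare a failed attempt with a successful one, assuming both hip trajectories are already aligned to the same hold frame. Each path is resampled to the same number of points by normalized progress along its length, and then the point-wise gap is measured. The progress value where the gap peaks hints at where the attempts diverge.

This is a deliberately simple stand-in. Dynamic time warping would better handle attempts that pause at different points.

```python
import numpy as np

def resample_by_arc_length(path, n: int = 100) -> np.ndarray:
    """Resample a (T, D) trajectory to n points evenly spaced along its arc length."""
    path = np.asarray(path, dtype=float)
    steps = np.linalg.norm(np.diff(path, axis=0), axis=1)
    s = np.concatenate([[0.0], np.cumsum(steps)])
    if s[-1] == 0.0:
        # The point never moved, so every resampled point is the same.
        return np.repeat(path[:1], n, axis=0)
    # Drop stationary frames so the progress values are strictly increasing for np.interp.
    keep = np.concatenate([[True], steps > 0])
    s, path = s[keep] / s[-1], path[keep]
    targets = np.linspace(0.0, 1.0, n)
    return np.column_stack([np.interp(targets, s, path[:, d]) for d in range(path.shape[1])])

def divergence(success, failed, n: int = 100):
    """Point-wise distance between two resampled paths, and the progress (0..1) where it peaks."""
    a = resample_by_arc_length(success, n)
    b = resample_by_arc_length(failed, n)
    gaps = np.linalg.norm(a - b, axis=1)
    peak = int(np.argmax(gaps))
    return gaps, peak / (n - 1)
```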

April 16, 2024

As per my previous post, I have begun delving into the topic of combining computer vision and deep learning techniques with my recent hobby, indoor climbing, specifically bouldering. I intend to use this page as a comprehensive note to collect what I have discovered regarding this topic and to potentially further develop real-world algorithms and applications. As I have almost no experience in outdoor climbing, all terms related to climbing mentioned here are in reference to indoor climbing.

Thoughts & Possible Research Directions

There are two general goals that I think could benefit climbers by enhancing their bouldering experience in meaningful ways, beyond just fancy pose estimation:

- Tutoring on bouldering moves for beginner climbers.

- Recording bouldering moves in a quantified-self manner.

But of course, these are only my own opinions so far. I plan to gather feedback from the climbers around me on how they would feel about incorporating technology into climbing, whether good or bad. As for the second point, I've noticed that current climbing journaling apps have a lot of room for improvement in terms of UI, and incorporating computer vision techniques could further enhance the user experience. Some may also ask, why not just use a notebook? I don't have an answer for that.

To achieve these two goals, there are some detailed (and interesting) problems to be explored or further improved based on existing projects, products, or literature.

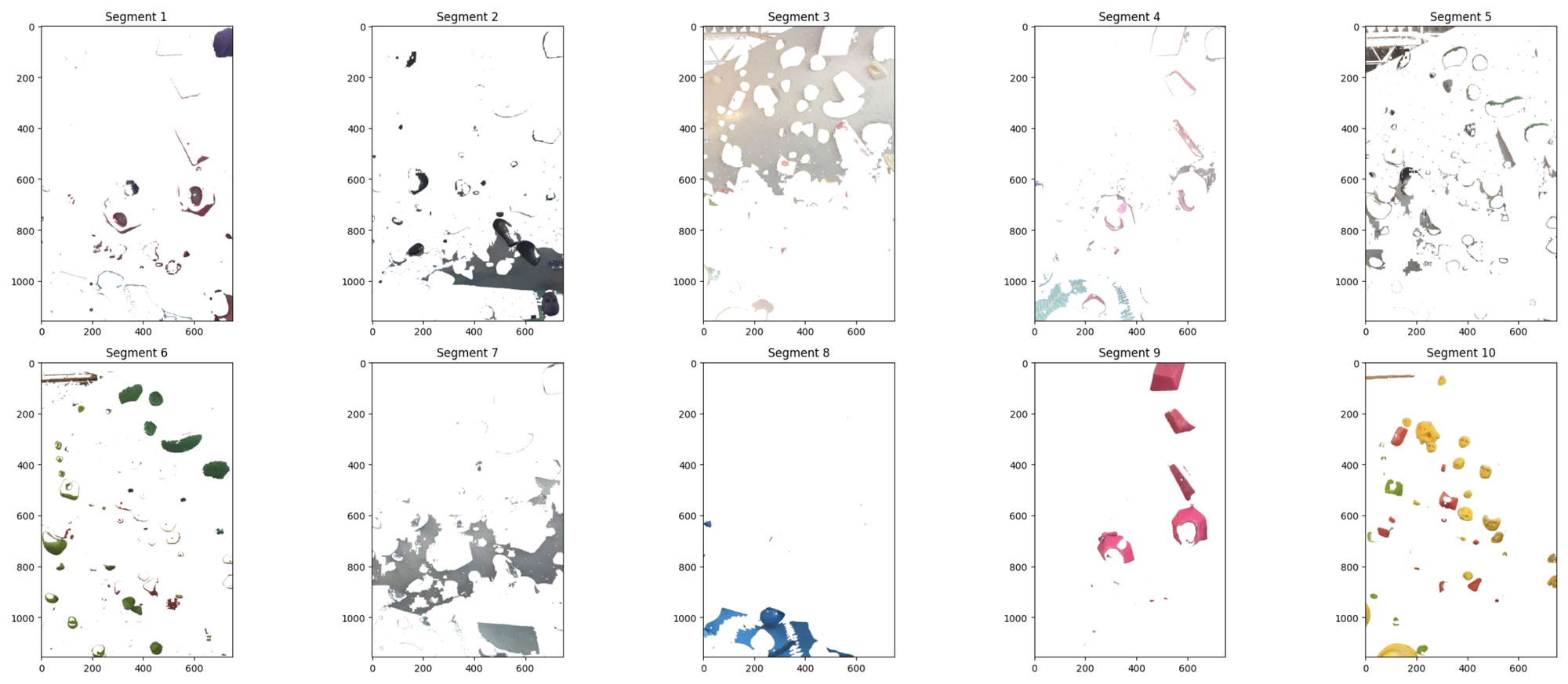

Bouldering Holds Segmentation

This idea involves extracting bouldering holds of different colors and shapes from a photo in 2D image space. The output would identify the type of each hold and which holds belong to which route. One naive solution is k-means clustering in HSV or Lab color space. Others prefer to train machine learning models on labeled ground-truth data and use them for dense prediction.
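The naive color-clustering baseline can be sketched with NumPy and the standard library alone, assuming a small RGB image given as a `(H, W, 3)` uint8 array. Hue is an angle, so the sketch encodes it as a point on a circle before running plain k-means. Scaling that point by saturation stops grey and white pixels from receiving an arbitrary hue.

Both the encoding and the choice of `k` are assumptions. In practice `k` would be roughly the number of hold colors plus the background.

```python
import colorsys
import numpy as np

def hsv_features(image_rgb: np.ndarray) -> np.ndarray:
    """Per-pixel features (s*cos(hue), s*sin(hue), value) for an (H, W, 3) uint8 RGB image."""
    pixels = image_rgb.reshape(-1, 3).astype(float) / 255.0
    hsv = np.array([colorsys.rgb_to_hsv(*p) for p in pixels])
    angle = 2.0 * np.pi * hsv[:, 0]  # hue is circular: 0 and 1 are both red
    s = hsv[:, 1]
    return np.column_stack([s * np.cos(angle), s * np.sin(angle), hsv[:, 2]])

def kmeans(features: np.ndarray, k: int, iters: int = 50, seed: int = 0):
    """Plain Lloyd's k-means; returns (labels, centers)."""
    rng = np.random.default_rng(seed)
    centers = features[rng.choice(len(features), size=k, replace=False)]
    for _ in range(iters):
        dists = np.linalg.norm(features[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Keep an empty cluster's center where it was instead of dividing by zero.
        new_centers = np.array([
            features[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers

def segment_by_color(image_rgb: np.ndarray, k: int) -> np.ndarray:
    """Label map of shape (H, W) assigning every pixel to one of k color clusters."""
    h, w, _ = image_rgb.shape
    labels, _ = kmeans(hsv_features(image_rgb), k)
    return labels.reshape(h, w)
```

The per-pixel `colorsys` loop is slow on full-size photos. OpenCV's `cv2.cvtColor` and `cv2.kmeans` do the same steps much faster.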

I've also considered whether automatically generating bouldering routes is a good idea. However, at the moment, I believe that without creativity and human interaction, purely randomly generated routes have no meaningful value, and you lose the fun in climbing. This topic will be discussed further in the future.

Some relevant resources I've collected so far for this subsection:

- Computer Vision Based Indoor Rock Climbing Analysis

- NeuralClimb: Using computer vision to detect rock climbing holds and generate routes

- Climb-o-Vision: A Computer Vision Driven Sensory Substitution Device for Rock Climbing

- MetaHolds: A Rock Climbing Interface for the Visually Impaired

- Trying Out Contours on a Climbing Wall using OpenCV

- Bouldering holds dataset:

3D Reconstructed Bouldering Routes

As you may have seen in many IFSC videos, before a competitor takes the stage there is typically an animation showing what the route looks like. The idea here is to reconstruct the entire 3D scene from a user's photos, for later use in simulation or route analysis. One possible solution is Gaussian splatting for precise reconstruction, and one existing application anyone can try is Luma Studio.

- NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis

- COLMAP-Free 3D Gaussian Splatting

- MotionBERT: A Unified Perspective on Learning Human Motion Representations

- MotionAGFormer: Enhancing 3D Pose Estimation with a Transformer-GCNFormer Network

- Real-Time and Accurate Full-Body Multi-Person Pose Estimation & Tracking System

Center-of-mass (CoM) Analysis

The goal is to analyze the CoM based on 3D pose estimation, to determine how climbers might benefit from adopting different postures to improve their balance and reach the next hold.

$$C=\frac{1}{M'}\sum_{i=1}^{M'}w_i\mathbf{j}_i$$

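where $\mathbf{j}_i$ represents the 3D position of the mid-point between two connected joints (e.g., we define the CoM of the left lower arm as the mid-point between the hand joint and the elbow joint), $w_i$ is the weight of the $i$-th segment, and $M'$ denotes the number of mid-points. For $C$ to remain a weighted average of the mid-points, the weights should satisfy $\frac{1}{M'}\sum_{i=1}^{M'}w_i=1$; uniform weights $w_i=1$ reduce $C$ to the plain mean.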
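The CoM formula translates almost directly into code. The sketch below makes three assumptions:

- 3D joints are stored in a dictionary keyed by joint names. The names here are hypothetical.
- The connected segments are a hand-picked list.
- Per-segment weights are optional. These could be relative segment masses from an anthropometric table, which are not given here.

The weights are rescaled so that their mean is 1. This keeps $C$ a weighted average of the mid-points, and with uniform weights it reduces to the plain mean.

```python
import numpy as np

# Hypothetical skeleton: each segment is a pair of connected joint names.
SEGMENTS = [
    ("left_shoulder", "left_elbow"), ("left_elbow", "left_wrist"),
    ("right_shoulder", "right_elbow"), ("right_elbow", "right_wrist"),
    ("left_hip", "left_knee"), ("left_knee", "left_ankle"),
    ("right_hip", "right_knee"), ("right_knee", "right_ankle"),
    ("left_shoulder", "left_hip"), ("right_shoulder", "right_hip"),
]

def center_of_mass(joints, segments=SEGMENTS, weights=None) -> np.ndarray:
    """C = (1/M') * sum_i w_i * j_i, where j_i is the mid-point of segment i."""
    mids = np.array([(np.asarray(joints[a], float) + np.asarray(joints[b], float)) / 2.0
                     for a, b in segments])         # j_i, shape (M', 3)
    m = len(mids)                                   # M'
    w = np.ones(m) if weights is None else np.asarray(weights, dtype=float)
    w = w * m / w.sum()                             # rescale so (1/M') * sum(w_i) == 1
    return (w[:, None] * mids).sum(axis=0) / m
```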

- Climbing Technique Evaluation by Means of Skeleton Video Stream Analysis

- 3D analysis of the body center of mass in rock climbing

- 3D Visualization of Body Motion in Speed Climbing

- 3D Human Pose Estimation via Intuitive Physics

I had fun climbing a V4-5 slab today at Mosaic Berkeley. It was a bit of a bummer because I almost finished it using my own approach, but I only managed to touch the second-to-last hold before losing my balance.

I had another idea for that movement but no energy left to test it physically after falling off, so I wonder whether applying some CoM analysis in a virtual 3D scene would be useful.

What I imagine is that after pose estimation is applied to the video, we will have 3D coordinates for the body (and also the center of mass). Wouldn't it be nice to let the user drag a certain joint or body part to another location and see whether the climber would fall off? In other words, this would let us validate an idea for a movement virtually, without having to climb the wall. This is also relevant to the "Virtual Agent for Learning Progress Simulation" section. We could set a CoM goal for a virtual agent and use reinforcement learning to teach it to avoid falling off the wall through countless simulations.
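A toy version of the drag-a-joint interaction is sketched below. It is an assumption-laden heuristic, not a physics simulation:

- The CoM is approximated as the plain mean of the joints.
- "Falling off" is approximated as the CoM drifting horizontally outside the span of the current contact points, roughly a barn-door check.

A real answer would need friction, hold orientation and force limits. That is where a physics engine, or the reinforcement-learning idea in the paragraph above, would come in. All function names here are hypothetical.

```python
import numpy as np

def com_proxy(joints: dict) -> np.ndarray:
    """Crude CoM stand-in: unweighted mean of all joint positions."""
    return np.mean([np.asarray(p, dtype=float) for p in joints.values()], axis=0)

def drag_joint(joints: dict, name: str, new_position) -> dict:
    """Return a copy of the pose with one joint moved; the original pose is left untouched."""
    moved = dict(joints)
    moved[name] = np.asarray(new_position, dtype=float)
    return moved

def within_contact_span(com, contacts, axis: int = 0, margin: float = 0.0) -> bool:
    """Heuristic: is the CoM horizontally between the outermost contact points?"""
    xs = [float(c[axis]) for c in contacts]
    return min(xs) - margin <= float(com[axis]) <= max(xs) + margin
```

For example, suppose both hands and the left foot are on holds and the hips are dragged far to the right. The proxy CoM then leaves the span of those three contacts, and the check flags the new position as likely to barn-door off.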

Bouldering Routes Evaluation

This idea revolves around exploring the possibility of "solving" bouldering routes or evaluating their difficulty. Grading can be hard even for humans, but tackling this challenge could help make grading more quantifiable.

Some existing works:

Virtual Agent for Learning Progress Simulation

This may seem absurd to some, but the essence of this idea is to apply deep reinforcement learning to potentially predict how a climber may progress and become better, based on existing quantifiable metrics such as finger/arm/core strength or flexibility. Imagine duplicating a climber's biometric data (finger strength, height, arm length, etc.) in a simulated world and allowing the agent to simulate the beginner climber's movements through hundreds of thousands of iterations, generating an evaluation report or even a training plan. This idea is still in its early stages, and I have not yet delved into any specific details. Updates will follow in the future.

Real-to-Sim Skill Learning

Motivated by the paper from X. B. Peng et al. titled "SFV: Reinforcement Learning of Physical Skills from Videos," this idea involves utilizing deep reinforcement learning to study the skills demonstrated by world-renowned climbers such as Adam Ondra and Janja Garnbret. The goal is to compile a set of valuable information and instructions based on these climbers' movements into a knowledge base for learning purposes.

- SFV: Reinforcement Learning of Physical Skills from Videos

- SIGGRAPH Asia 2018: Skills from Videos paper (main video) on YouTube

- Computational climbing for physics-based characters

Relevant Applications

Last update: January 2026

- Climbnet: "CNN for detecting + segmenting indoor climbing holds"

- Climbuddy, developed by Tomáš Sláma: "using computer vision, machine learning, and our love for bouldering, we turn images into 3D models of the boulders, allowing you to: log your sends, track your progress, and compete with others"

- Caliscope is "a GUI-based multicamera calibration package. It simplifies the process of determining camera properties to enable 3D motion capture."

- Hold Detector Computer Vision Model by ClimbAI: "It's a collection of images from bouldering gyms showing all sorts of holds and volumes climbers use."

- Red-Point "is the first ever Augmented Reality Climbing Guide that works with no internet connection."

- Climbalyzer "is an AI powered 3D body position and movement analysis app for coaches and self-coached climbers."

- Belay.ai: "AI that understands climbing." Here's a video where the Norwegian climber Magnus reviews their technology.

- Valo Motion: "Gamify your climbing walls with our augmented climbing wall game platform."

- Boardclimbs: "a free to use spraywall logging webapp." I recently saw one of their stories where they released a feature for segmenting the holds from a user's picture. I haven’t tried it, but it looks interesting.

- Climbing Ratings is "software that estimates ratings for the sport of rock climbing. The ratings can be used to predict route difficulty and climber performance on a particular route."

- BoulderVision: "Using Computer Vision to Assess Bouldering Performance" by Daniel Reiff

- OpenCap is "an open source software developed at Stanford University"

- 3D scanning software that uses either traditional photogrammetry or the Gaussian splatting approach to virtually reconstruct physical scenes:

    - Scaniverse by Niantic

    - Luma 3D Capture by Luma AI

    - nerf.studio, "a simple API that allows for a simplified end-to-end process of creating, training, and testing NeRFs."

- Other things relevant to this topic:

    - Babylon.js is an open web rendering engine that uses WebGL. Its latest Gaussian splatting feature may be helpful for quickly building a proof-of-concept 3D demo for any of the ideas mentioned above.

    - Also see huggingface/gsplat.js

    - OnlineObservation on Sketchfab: "archive bouldering problem for RouteSetters, Gyms, Promoters, and Climbers." It contains 3D meshes of the climbs from world-class climbing competitions. Thanks to Hao-Chien for sharing this with me.

    - "4D Gaussian Splatting for Real-Time Dynamic Scene Rendering" [CVPR 2024]. From the abstract, "[...] In 4D-GS, a novel explicit representation containing both 3D Gaussians and 4D neural voxels is proposed. A decomposed neural voxel encoding algorithm inspired by HexPlane is proposed to efficiently build Gaussian features from 4D neural voxels and then a lightweight MLP is applied to predict Gaussian deformations at novel timestamps."
